Class 10 AI Unit 3 Evaluating Models – Model Evaluation and Train-test split Notes with Worksheet

Introduction

So far, you have learned about four stages of the AI project cycle: Problem Scoping, Data Acquisition, Data Exploration, and Modelling.

In the Modelling stage, you can build different types of AI models. However, you still need to check whether a model works as required.

In the Evaluation stage, we will explore different methods of evaluating an AI model. Model Evaluation is an integral part of the model development process. It helps us find the model that best represents our data and estimate how well the chosen model will work in the future.

3.1 Importance of Model Evaluation

What is Evaluation or Model Evaluation?

Model evaluation is the process of using different evaluation metrics to understand a machine learning model’s performance.

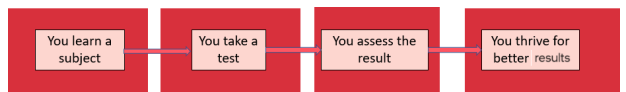

- An AI model gets better with constructive feedback.

- You build a model, get feedback from metrics, make improvements and continue until you achieve a desirable accuracy.

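- This repeated cycle is called a feedback loop.

- It is like your school report card, which shows where you are doing well and where you need to improve.

- In the same way, an AI model is evaluated to find out where it performs well and where it needs improvement.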
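As a small illustration of a "report card" for a model, the sketch below computes accuracy, one common evaluation metric: the fraction of predictions that match the expected answers. The function name `accuracy` and the sample labels are made up for this example; they do not come from any particular library.

```python
def accuracy(expected, predicted):
    """Return the fraction of predictions that match the expected labels."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        raise ValueError("cannot compute accuracy on an empty list")
    correct = sum(1 for e, p in zip(expected, predicted) if e == p)
    return correct / len(expected)


# Hypothetical labels: the right answers vs. what a model predicted.
expected = ["spam", "not spam", "spam", "not spam", "spam"]
predicted = ["spam", "not spam", "not spam", "not spam", "spam"]

score = accuracy(expected, predicted)
print(f"Accuracy: {score:.0%}")  # 4 of 5 predictions match, so 80%
```

If the score is lower than desired, the model is improved and evaluated again, which is the feedback loop described above.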

Need for Model Evaluation

Model evaluation helps you understand a model’s strengths, weaknesses, and suitability for the task at hand. This feedback loop is essential for building trustworthy and reliable AI systems.

3.2 Splitting the training set data for Evaluation (Train-Test Split Evaluation)

What is Train-Test Split?

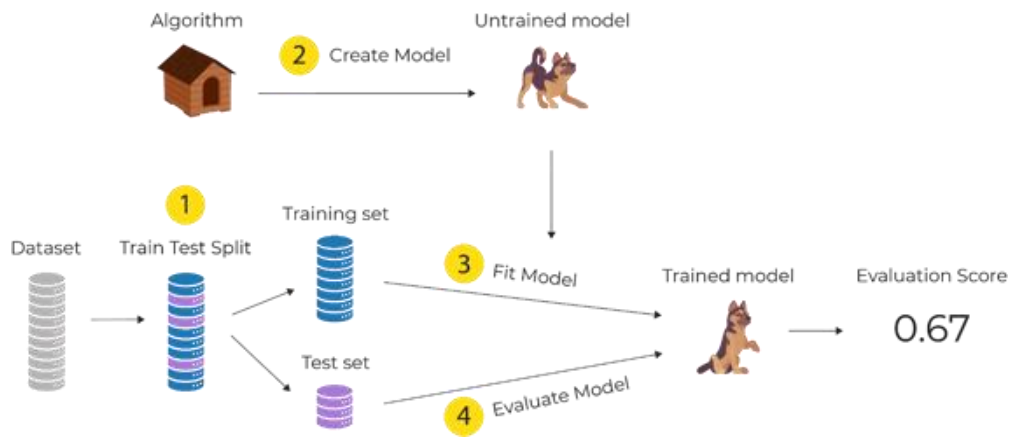

Train-test split is a model validation procedure that allows you to simulate how a model would perform on new, unseen data.

The train-test split is a technique for evaluating the performance of a machine learning algorithm. It can be used for any supervised learning algorithm.

- The procedure involves taking a dataset and dividing it into two subsets:

  - The training dataset: the data used to train the model.

  - The testing dataset: data that is new to the model, used to test the model’s performance and accuracy.

- The train-test procedure is appropriate when there is a sufficiently large dataset available.
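The splitting step can be sketched in a few lines of plain Python. This is only an illustration: the function name `split_train_test`, the 80/20 ratio, the fixed seed, and the sample data are assumptions chosen for the example, not part of the syllabus text.

```python
import random


def split_train_test(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                  # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    test_size = max(1, round(len(shuffled) * test_fraction))
    return shuffled[test_size:], shuffled[:test_size]


# Hypothetical dataset: (hours studied, passed exam?) for 10 students.
dataset = [(1, 0), (2, 0), (3, 0), (4, 0), (5, 1),
           (6, 1), (7, 1), (8, 1), (9, 1), (10, 1)]

train_set, test_set = split_train_test(dataset, test_fraction=0.2)
print("Training rows:", len(train_set))  # 8
print("Testing rows:", len(test_set))    # 2
```

Shuffling before splitting keeps the test set from being just the last few rows of the file. In practice, libraries such as scikit-learn offer a ready-made `train_test_split` function for this step.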

Why do we need to do a Train-Test Split?

The train dataset is used to make the model learn. The input elements of the test dataset are provided to the trained model. The model makes predictions, and the predicted values are compared to the expected values.

The main objective of Train-Test split model evaluation is to estimate the performance of the machine learning model on new data, i.e., data not used to train the model.

- In practice, we fit the model on available data with known inputs and outputs, then use it to make predictions on new examples in the future, for which we do not have the expected output or target values.
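The train, predict, and compare steps described above can be sketched with a very simple model. Everything here is hypothetical and chosen only for illustration: the data, the helper names `train_threshold` and `predict`, and the "model" itself, which just learns a cut-off value for hours studied.

```python
def train_threshold(train_rows):
    """Learn a cut-off: the midpoint between the average hours of each class."""
    passed = [hours for hours, label in train_rows if label == 1]
    failed = [hours for hours, label in train_rows if label == 0]
    return (sum(passed) / len(passed) + sum(failed) / len(failed)) / 2


def predict(threshold, hours):
    """Predict 1 (pass) if hours studied is at or above the learned cut-off."""
    return 1 if hours >= threshold else 0


# Hypothetical data: (hours studied, passed exam?)
train_rows = [(1, 0), (2, 0), (3, 0), (6, 1), (8, 1), (10, 1)]
test_rows = [(4, 0), (5, 0), (7, 1), (9, 1)]  # never shown during training

threshold = train_threshold(train_rows)

# Give only the inputs of the test set to the trained model...
predictions = [predict(threshold, hours) for hours, _ in test_rows]
# ...then compare the predicted values with the expected values.
expected = [label for _, label in test_rows]
correct = sum(1 for p, e in zip(predictions, expected) if p == e)

print("Learned threshold:", threshold)               # 5.0
print("Predicted:", predictions)                     # [0, 1, 1, 1]
print("Expected: ", expected)                        # [0, 0, 1, 1]
print("Test accuracy:", correct / len(test_rows))    # 0.75
```

The model gets one test example wrong, which is exactly the kind of information that evaluation on unseen data is meant to reveal.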

Overfitting:

Overfitting is when a machine learning model learns the training data too well, including its noise and details, instead of just the general patterns. As a result, the model performs very well on the training data but gives poor results on new or unseen data.

- It is not recommended to evaluate a model on the same data that was used to build it. A model that has simply memorized the training set will predict the correct label for every point in that set, so its score will look excellent even when the model is overfitted. Only data the model has not seen can reveal overfitting.

👉 In short: Overfitting = good on training data, bad on new data.
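To see why a score on the training data can be misleading, here is a toy sketch of an extreme case: a model that only memorizes. The `MemorizingModel` class, the `accuracy` helper, and the weather data are invented for illustration and are not from a real library.

```python
class MemorizingModel:
    """A deliberately bad model: it stores every training example in a lookup table."""

    def fit(self, rows):
        self.memory = {features: label for features, label in rows}
        return self

    def predict(self, features):
        # Perfect recall for seen inputs; a fixed guess ("no") for anything new.
        return self.memory.get(features, "no")


def accuracy(model, rows):
    """Fraction of rows for which the model predicts the expected label."""
    correct = sum(1 for features, label in rows if model.predict(features) == label)
    return correct / len(rows)


# Hypothetical data: (weather, temperature) -> play outside?
train_rows = [(("sunny", "warm"), "yes"), (("rainy", "cold"), "no"),
              (("sunny", "hot"), "yes"), (("cloudy", "cold"), "no")]
test_rows = [(("sunny", "mild"), "yes"), (("rainy", "warm"), "no"),
             (("cloudy", "warm"), "yes"), (("sunny", "cool"), "yes")]

model = MemorizingModel().fit(train_rows)
print("Accuracy on training data:", accuracy(model, train_rows))  # 1.0
print("Accuracy on unseen data:  ", accuracy(model, test_rows))   # 0.25
```

The perfect training score says nothing about how the model handles new inputs; only the separate test set exposes the problem.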

Model Evaluation and Train–Test Split Worksheet (With Answers)

Section A: Multiple Choice Questions

- Model Evaluation is mainly used to

a) Collect data

b) Improve hardware

c) Measure model performance

d) Write algorithms

Answer: c) Measure model performance

- Model Evaluation is performed after which stage of the AI Project Cycle?

a) Data Acquisition

b) Problem Scoping

c) Modelling

d) Deployment

Answer: c) Modelling

- Model Evaluation helps in finding

a) Dataset size

b) Best performing model

c) Storage requirement

d) Programming language

Answer: b) Best performing model

- Feedback in model evaluation is similar to

a) Attendance register

b) School report card

c) Exam syllabus

d) Homework

Answer: b) School report card

- Train-Test Split is mainly used to

a) Store data

b) Increase data size

c) Evaluate model performance

d) Remove noise

Answer: c) Evaluate model performance

- Train-Test Split is applicable for

a) Unsupervised learning only

b) Supervised learning

c) Reinforcement learning only

d) Hardware models

Answer: b) Supervised learning

- Which dataset is used to train the model?

a) Validation dataset

b) Testing dataset

c) Training dataset

d) Random dataset

Answer: c) Training dataset

- The testing dataset contains data that is

a) Already memorized

b) New to the model

c) Incorrect

d) Deleted

Answer: b) New to the model

- Overfitting occurs when a model

a) Learns general patterns

b) Ignores training data

c) Learns training data too well

d) Uses less data

Answer: c) Learns training data too well

- A model suffering from overfitting performs

a) Poorly on training data

b) Well on new data

c) Well on training data but poorly on new data

d) Equally on all data

Answer: c) Well on training data but poorly on new data

Section B: Assertion and Reason

- Assertion: Model evaluation is an important part of model development.

Reason: It helps understand how well a model will perform in the future.

Answer: Both Assertion and Reason are true and Reason correctly explains the Assertion.

- Assertion: Testing data is used to train the model.

Reason: Testing data is new and unseen by the model.

Answer: Assertion is false and Reason is true.

- Assertion: Feedback improves AI models.

Reason: Feedback helps in making improvements until desired accuracy is achieved.

Answer: Both Assertion and Reason are true and Reason correctly explains the Assertion.

- Assertion: Using the same data for training and evaluation is recommended.

Reason: It gives a misleadingly high score and can hide overfitting.

Answer: Assertion is false and Reason is true.

- Assertion: Train-Test split estimates performance on unseen data.

Reason: The model is tested using new data.

Answer: Both Assertion and Reason are true and Reason correctly explains the Assertion.

Section C: Fill in the Blanks

- Model evaluation checks whether the model meets the __________.

Answer: requirements

- Model evaluation uses different __________ to judge performance.

Answer: evaluation metrics

- Feedback helps in __________ the model.

Answer: improving

- Train-Test Split is a __________ technique.

Answer: model validation

- The dataset is divided into __________ and testing sets.

Answer: training

- The training dataset helps the model to __________.

Answer: learn

- Testing data is __________ to the model.

Answer: new

- Overfitting occurs when a model learns __________ instead of patterns.

Answer: noise

- Overfitting gives poor results on __________ data.

Answer: unseen

- Evaluating a model only on its training data can hide __________.

Answer: overfitting

Section D: Short Answer Questions (30 words)

- What is Model Evaluation?

Answer: Model evaluation is the process of using evaluation metrics to measure the performance of a machine learning model and check how well it works on future data.

- Why is model evaluation important?

Answer: Model evaluation helps identify strengths, weaknesses, and reliability of a model, ensuring it performs well on unseen data.

- Explain feedback loop in AI models.

Answer: A feedback loop involves building a model, evaluating it using metrics, improving it, and repeating the process until desired accuracy is achieved.

- What is Train-Test Split?

Answer: Train-Test Split is a validation method where data is divided into training and testing sets to evaluate model performance.

- Why do we use testing data?

Answer: Testing data is used to check how well a trained model performs on new and unseen data.

Section E: Long Answer Questions (80 Words)

- Explain the importance of Model Evaluation.

Answer: Model evaluation is essential to understand how accurately an AI model performs and whether it meets requirements. It helps identify strengths and weaknesses of the model and ensures reliability on unseen data. Through evaluation metrics and feedback, improvements can be made continuously. Without evaluation, a model may appear accurate but fail in real-world scenarios.

- Describe the Train-Test Split Evaluation method.

Answer: Train-Test Split is a model validation technique where the dataset is divided into two parts. The training dataset is used to train the model, while the testing dataset is used to evaluate its performance. This method helps estimate how well the model will perform on unseen data and is effective when large datasets are available.

- Explain overfitting in machine learning.

Answer: Overfitting occurs when a model learns training data too well, including noise and unnecessary details. As a result, it performs excellently on training data but poorly on new data. The model memorizes instead of generalizing. If the same dataset is used for both training and evaluation, the problem stays hidden, because a model that has memorized the data still scores well on it.

Section F: Competency / Case-Based Questions

- A student evaluates an AI model using the same data that was used for training. The model shows very high accuracy. Is this reliable? Why?

Answer: No, this is not reliable. The model may have memorized the training data (overfitting), so a high score on that same data does not show how it will perform on new data.

- An AI model performs well during training but fails during real-world use. Identify the issue.

Answer: The model is likely suffering from overfitting.

- A teacher wants to check how a model performs on unseen data. Which evaluation method should be used?

Answer: Train-Test Split evaluation should be used.

- Why is feedback important in model development?

Answer: Feedback helps improve the model by identifying errors and refining it until acceptable accuracy is achieved.

- Why should testing data be kept separate from training data?

Answer: Separate testing data ensures fair evaluation and helps measure model performance on unseen data.

By Anjeev Kr Singh – Computer Science Educator

Published on: February 5, 2026 | Updated on: February 8, 2026